AAudio is an audio API introduced in the Android 8.0 release. The Android 8.1 release has enhancements to reduce latency when used in conjunction with a HAL and driver that support MMAP. This document describes the hardware abstraction layer (HAL) and driver changes needed to support AAudio's MMAP feature in Android.

Support for AAudio MMAP requires:

- reporting the MMAP capabilities of the HAL

- implementing new functions in the HAL

- optionally implementing a custom ioctl() for the EXCLUSIVE mode buffer

- providing an additional hardware data path

- setting system properties that enable the MMAP feature

AAudio architecture

AAudio is a new native C API that provides an alternative to OpenSL ES. It uses a Builder design pattern to create audio streams.

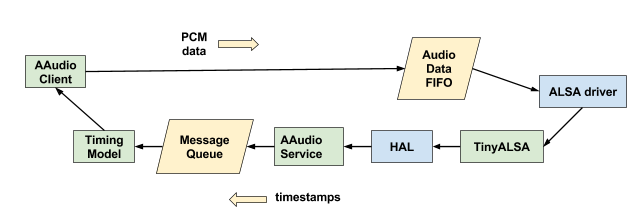

AAudio provides a low-latency data path. In EXCLUSIVE mode, the feature allows client application code to write directly into a memory mapped buffer that is shared with the ALSA driver. In SHARED mode, the MMAP buffer is used by a mixer running in the AudioServer. In EXCLUSIVE mode, the latency is significantly less because the data bypasses the mixer.
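In EXCLUSIVE mode the client writes straight into the shared buffer, which behaves as a circular FIFO. The following is a minimal sketch of that write step, assuming interleaved 16-bit stereo frames and using an ordinary array as a stand-in for the memory-mapped region. The type `mmap_fifo_sketch`, the function `fifo_write`, and the capacity are hypothetical names chosen for illustration; they are not part of AAudio.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for the shared MMAP region: a circular buffer of
 * interleaved 16-bit stereo frames. A real stream would map this memory
 * from the file descriptor returned by the HAL. */
#define CHANNELS 2
#define BUFFER_CAPACITY_FRAMES 8

typedef struct {
    int16_t samples[BUFFER_CAPACITY_FRAMES * CHANNELS];
    int64_t write_counter; /* total frames written by the client so far */
} mmap_fifo_sketch;

/* Copy num_frames interleaved frames (num_frames <= capacity) into the FIFO
 * at the current write position, splitting the copy in two when it wraps
 * past the end of the buffer. No overrun check is done here: with a NOIRQ
 * buffer there is no shared read counter to check against. */
static void fifo_write(mmap_fifo_sketch *fifo, const int16_t *src, int32_t num_frames) {
    int32_t offset = (int32_t)(fifo->write_counter % BUFFER_CAPACITY_FRAMES);
    int32_t first = BUFFER_CAPACITY_FRAMES - offset;
    if (first > num_frames) {
        first = num_frames;
    }
    memcpy(&fifo->samples[offset * CHANNELS], src,
           (size_t)first * CHANNELS * sizeof(int16_t));
    int32_t second = num_frames - first;
    if (second > 0) {
        /* Wrapped: continue at the start of the buffer. */
        memcpy(&fifo->samples[0], src + first * CHANNELS,
               (size_t)second * CHANNELS * sizeof(int16_t));
    }
    fifo->write_counter += num_frames;
}
```

Nothing in the sketch prevents the writer from overwriting frames the hardware has not read yet; with no shared read counter, avoiding that is the job of the client's timing model.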

In EXCLUSIVE mode, the service requests the MMAP buffer from the HAL and manages the resources. The MMAP buffer runs in NOIRQ mode, so there are no shared read/write counters to manage access to the buffer. Instead, the client maintains a timing model of the hardware and predicts when the buffer will be read.

In the diagram above, we can see pulse-code modulation (PCM) data flowing down through the MMAP FIFO into the ALSA driver. Timestamps are periodically requested by the AAudio service and then passed up to the client's timing model through an atomic message queue.
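The timing model can be pictured as linear extrapolation from the most recent timestamp. Below is a minimal sketch, assuming the hardware consumes exactly `sample_rate` frames per second of CLOCK_MONOTONIC time. `timing_model_sketch`, `estimate_hw_position`, and `timespec_to_nanos` are hypothetical helpers, not AAudio or tinyALSA APIs.

```c
#include <stdint.h>
#include <time.h>

#define NANOS_PER_SECOND 1000000000LL

/* Hypothetical timestamp record: a frame position plus the CLOCK_MONOTONIC
 * time, in nanoseconds, at which the hardware was at that position. */
typedef struct {
    int64_t position_frames;
    int64_t time_nanos;
} timing_model_sketch;

/* Convert a struct timespec (for example a tstamp reported alongside a
 * hardware pointer) into nanoseconds. */
static int64_t timespec_to_nanos(const struct timespec *ts) {
    return (int64_t)ts->tv_sec * NANOS_PER_SECOND + ts->tv_nsec;
}

/* Extrapolate the hardware frame position at now_nanos, assuming the
 * hardware advances at exactly sample_rate frames per second. */
static int64_t estimate_hw_position(const timing_model_sketch *model,
                                    int64_t now_nanos, int32_t sample_rate) {
    int64_t elapsed = now_nanos - model->time_nanos;
    return model->position_frames + (elapsed * sample_rate) / NANOS_PER_SECOND;
}
```

For example, a timestamp of frame 1000 at 2 s, queried 10 ms later at 48000 Hz, predicts frame 1480. A real model also has to tolerate clock drift and timestamp jitter, which the sketch ignores; the product `elapsed * sample_rate` stays within 64 bits for elapsed times of several hours at common sample rates.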

In SHARED mode, a timing model is also used, but it lives in the AAudioService.

For audio capture, a similar model is used, but the PCM data flows in the opposite direction.

HAL changes

For tinyALSA see:

- external/tinyalsa/include/tinyalsa/asoundlib.h
- external/tinyalsa/include/tinyalsa/pcm.c

```c
int pcm_start(struct pcm *pcm);
int pcm_stop(struct pcm *pcm);
int pcm_mmap_begin(struct pcm *pcm, void **areas, unsigned int *offset, unsigned int *frames);
int pcm_get_poll_fd(struct pcm *pcm);
int pcm_mmap_commit(struct pcm *pcm, unsigned int offset, unsigned int frames);
int pcm_mmap_get_hw_ptr(struct pcm* pcm, unsigned int *hw_ptr, struct timespec *tstamp);
```

For the legacy HAL, see:

- hardware/libhardware/include/hardware/audio.h
- hardware/qcom/audio/hal/audio_hw.c

```c
/* In audio.h these are declared as function-pointer members of struct audio_stream_out. */
int start(const struct audio_stream_out* stream);
int stop(const struct audio_stream_out* stream);
int create_mmap_buffer(const struct audio_stream_out *stream, int32_t min_size_frames,
                       struct audio_mmap_buffer_info *info);
int get_mmap_position(const struct audio_stream_out *stream, struct audio_mmap_position *position);
```

For HIDL audio HAL:

- hardware/interfaces/audio/2.0/IStream.hal
- hardware/interfaces/audio/2.0/types.hal
- hardware/interfaces/audio/2.0/default/Stream.h

```
start() generates (Result retval);
stop() generates (Result retval);
createMmapBuffer(int32_t minSizeFrames)
        generates (Result retval, MmapBufferInfo info);
getMmapPosition()
        generates (Result retval, MmapPosition position);
```

Report MMAP support

Set the system property "aaudio.mmap_policy" to 2 (AAUDIO_POLICY_AUTO) so that the audio framework knows the audio HAL supports MMAP mode (see "Enable AAudio MMAP data path" below).

The audio_policy_configuration.xml file must also contain an output profile and an input profile specific to MMAP/NOIRQ mode, so that the Audio Policy Manager knows which stream to open when MMAP clients are created:

```xml
<mixPort name="mmap_no_irq_out" role="source"
        flags="AUDIO_OUTPUT_FLAG_DIRECT|AUDIO_OUTPUT_FLAG_MMAP_NOIRQ">
    <profile name="" format="AUDIO_FORMAT_PCM_16_BIT"
             samplingRates="48000"
             channelMasks="AUDIO_CHANNEL_OUT_STEREO"/>
</mixPort>
<mixPort name="mmap_no_irq_in" role="sink" flags="AUDIO_INPUT_FLAG_MMAP_NOIRQ">
    <profile name="" format="AUDIO_FORMAT_PCM_16_BIT"
             samplingRates="48000"
             channelMasks="AUDIO_CHANNEL_IN_STEREO"/>
</mixPort>
```

Open and close an MMAP stream

```
createMmapBuffer(int32_t minSizeFrames)
        generates (Result retval, MmapBufferInfo info);
```

The MMAP stream can be opened and closed by calling tinyALSA functions.
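`minSizeFrames` is a minimum, so the buffer the HAL returns may be larger. As one hypothetical sizing policy (an assumption for illustration, not a documented requirement), a HAL could round the request up to a whole number of hardware bursts. `round_up_to_bursts` is a hypothetical helper name.

```c
#include <stdint.h>

/* Hypothetical sizing policy: round the requested minimum up to a whole
 * number of hardware bursts, so the result is always >= min_size_frames.
 * Overflow for extremely large requests is not handled in this sketch. */
static int32_t round_up_to_bursts(int32_t min_size_frames, int32_t burst_size_frames) {
    if (burst_size_frames <= 0) {
        return min_size_frames;   /* no burst size known: use the request as-is */
    }
    if (min_size_frames <= 0) {
        return burst_size_frames; /* always allocate at least one burst */
    }
    int32_t bursts = (min_size_frames + burst_size_frames - 1) / burst_size_frames;
    return bursts * burst_size_frames;
}
```

With a 192-frame burst, a request for 1000 frames becomes 1152 frames (6 bursts), while a request for 384 frames is returned unchanged.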

Query MMAP position

The timestamp passed back to the timing model contains a frame position and a MONOTONIC time in nanoseconds:

```
getMmapPosition()
        generates (Result retval, MmapPosition position);
```

The HAL can obtain this information from the ALSA driver by calling a new tinyALSA function:

```c
int pcm_mmap_get_hw_ptr(struct pcm* pcm,
                        unsigned int *hw_ptr,
                        struct timespec *tstamp);
```

File descriptors for shared memory

The AAudio MMAP data path uses a memory region that is shared between the hardware and the audio service. The shared memory is referenced using a file descriptor that is generated by the ALSA driver.
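Because the memory is identified by a file descriptor, every component that maps that descriptor with `MAP_SHARED` sees the same bytes. The sketch below demonstrates this with plain POSIX calls. It is only an analogy: `tmpfile()` stands in for the descriptor the ALSA driver would provide, and `shared_fd_roundtrip` is a hypothetical helper.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdint.h>
#include <stdio.h>
#include <sys/mman.h>
#include <unistd.h>

/* Map one file descriptor twice, write through the first mapping, and
 * return what the second mapping sees. Returns -1 on error. */
static int shared_fd_roundtrip(int16_t value) {
    FILE *f = tmpfile();            /* stand-in for the driver-provided fd */
    if (f == NULL) {
        return -1;
    }
    int fd = fileno(f);
    size_t size = 4096;
    int result = -1;
    if (ftruncate(fd, (off_t)size) == 0) {
        int16_t *producer = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
        int16_t *consumer = mmap(NULL, size, PROT_READ, MAP_SHARED, fd, 0);
        if (producer != MAP_FAILED && consumer != MAP_FAILED) {
            producer[0] = value;    /* e.g. the side writing PCM data */
            result = consumer[0];   /* e.g. the side reading it back */
        }
        if (producer != MAP_FAILED) munmap(producer, size);
        if (consumer != MAP_FAILED) munmap(consumer, size);
    }
    fclose(f);
    return result;
}
```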

Kernel changes

If the file descriptor is directly associated with a /dev/snd/ driver file, then the AAudio service can use it in SHARED mode. However, the descriptor cannot be passed to the client code for EXCLUSIVE mode: a /dev/snd/ file descriptor would give the client overly broad access, so SELinux blocks it.

To support EXCLUSIVE mode, the /dev/snd/ descriptor must be converted to an anon_inode:dmabuf file descriptor. SELinux allows that file descriptor to be passed to the client, and the AAudioService can also use it.

An anon_inode:dmabuf file descriptor can be generated using the Android ION memory library.

For additional information, see these external resources:

- "The Android ION memory allocator" https://lwn.net/Articles/480055/

- "Android ION overview" https://wiki.linaro.org/BenjaminGaignard/ion

- "Integrating the ION memory allocator" https://lwn.net/Articles/565469/

HAL changes

The AAudio service needs to know whether this anon_inode:dmabuf is supported. Before Android 10.0, the only way to indicate support was to pass the size of the MMAP buffer as a negative number, e.g. -2048 instead of 2048. In Android 10.0 and later, you can set the AUDIO_MMAP_APPLICATION_SHAREABLE flag instead:

```c
/* mmapBufferInfo is the struct audio_mmap_buffer_info filled in by the HAL. */
mmapBufferInfo.flags |= AUDIO_MMAP_APPLICATION_SHAREABLE;
```
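To make the two conventions concrete, here is a minimal sketch of how a reader could accept either one. The struct `mmap_info_sketch`, the constant `SKETCH_APPLICATION_SHAREABLE`, and both functions are hypothetical local mirrors for illustration, not the platform definitions, and the logic is not the AAudio service's actual code.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical mirror of the two fields involved. */
typedef struct {
    int32_t buffer_size_frames;
    uint32_t flags;
} mmap_info_sketch;

#define SKETCH_APPLICATION_SHAREABLE 0x1u /* assumed value, illustration only */

/* Pre-Android 10.0 convention: a negative size signals "shareable". */
static void report_legacy(mmap_info_sketch *info, int32_t size_frames, bool shareable) {
    info->buffer_size_frames = shareable ? -size_frames : size_frames;
    info->flags = 0;
}

/* Accept either the flag or the legacy negative size; return the real
 * (positive) size and report shareability through *shareable. */
static int32_t decode_size(const mmap_info_sketch *info, bool *shareable) {
    int32_t size = info->buffer_size_frames;
    *shareable = (info->flags & SKETCH_APPLICATION_SHAREABLE) != 0 || size < 0;
    return size < 0 ? -size : size;
}
```

The flag keeps the size field meaning exactly one thing, which is presumably why it replaced the sign trick.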

Audio subsystem changes

AAudio requires an additional data path at the audio front end of the audio subsystem so that it can operate in parallel with the original AudioFlinger path. That legacy path is used for all other system sounds and application sounds. This functionality could be provided by a software mixer in a DSP or by a hardware mixer in the SoC.
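As a rough illustration of the software-mixer option, the sketch below sums the AAudio MMAP path and the legacy path sample by sample. It assumes both paths deliver 16-bit PCM at the same sample rate and channel layout; `mix_saturating` is a hypothetical name, and a real DSP mixer may also need gain control and format conversion.

```c
#include <stddef.h>
#include <stdint.h>

/* Sum two 16-bit PCM streams sample by sample, clamping to the int16
 * range instead of letting the sum wrap around. */
static void mix_saturating(const int16_t *mmap_path, const int16_t *legacy_path,
                           int16_t *out, size_t num_samples) {
    for (size_t i = 0; i < num_samples; i++) {
        int32_t sum = (int32_t)mmap_path[i] + (int32_t)legacy_path[i];
        if (sum > INT16_MAX) sum = INT16_MAX;
        if (sum < INT16_MIN) sum = INT16_MIN;
        out[i] = (int16_t)sum;
    }
}
```

Clamping trades a little distortion on loud peaks for the much harsher artifacts that integer wrap-around would produce.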

Enable AAudio MMAP data path

AAudio uses the legacy AudioFlinger data path if MMAP is not supported or if opening an MMAP stream fails, so AAudio works with audio devices that do not support the MMAP/NOIRQ path.

When testing MMAP support for AAudio, it is important to know whether you are actually testing the MMAP data path or merely testing the legacy data path. The following describes how to enable or force specific data paths, and how to query the path used by a stream.

System properties

You can set the MMAP policy through system properties:

- 1 = AAUDIO_POLICY_NEVER - Only use legacy path. Do not even try to use MMAP.

- 2 = AAUDIO_POLICY_AUTO - Try to use MMAP. If that fails or is not available, then use legacy path.

- 3 = AAUDIO_POLICY_ALWAYS - Only use MMAP path. Do not fall back to legacy path.
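The fallback rules above can be summarized as a small decision function. This is a sketch of the documented behavior, not framework code. The enum values mirror the numeric property values listed above, but the names `mmap_policy_sketch`, `data_path_sketch`, and `choose_path` are hypothetical.

```c
#include <stdbool.h>

/* Hypothetical mirror of the policy values listed above. */
typedef enum {
    POLICY_NEVER = 1,
    POLICY_AUTO = 2,
    POLICY_ALWAYS = 3,
} mmap_policy_sketch;

typedef enum { PATH_LEGACY, PATH_MMAP, PATH_ERROR } data_path_sketch;

/* Pick a data path given the policy and whether opening an MMAP stream
 * succeeded. mmap_open_ok is ignored for POLICY_NEVER, since MMAP is
 * not even attempted in that case. */
static data_path_sketch choose_path(mmap_policy_sketch policy, bool mmap_open_ok) {
    switch (policy) {
    case POLICY_NEVER:
        return PATH_LEGACY;
    case POLICY_ALWAYS:
        return mmap_open_ok ? PATH_MMAP : PATH_ERROR; /* no fallback */
    case POLICY_AUTO:
    default:
        return mmap_open_ok ? PATH_MMAP : PATH_LEGACY;
    }
}
```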

These may be set in the device's Makefile, like so:

```makefile
# Enable AAudio MMAP/NOIRQ data path.
# 2 is AAUDIO_POLICY_AUTO so it will try MMAP then fall back to the legacy path.
PRODUCT_PROPERTY_OVERRIDES += aaudio.mmap_policy=2
# Allow EXCLUSIVE then fall back to SHARED.
PRODUCT_PROPERTY_OVERRIDES += aaudio.mmap_exclusive_policy=2
```

You can also override these values after the device has booted. You will need to restart the audioserver for the change to take effect. For example, to enable AUTO mode for MMAP:

```shell
adb root
adb shell setprop aaudio.mmap_policy 2
adb shell killall audioserver
```

The functions in ndk/sysroot/usr/include/aaudio/AAudioTesting.h allow you to override the policy for using the MMAP path:

```c
aaudio_result_t AAudio_setMMapPolicy(aaudio_policy_t policy);
```

To find out whether a stream is using the MMAP path, call:

```c
bool AAudioStream_isMMapUsed(AAudioStream* stream);
```